CVPR 2020 Tutorial on

Image Retrieval in the Wild

Time and venue

- Date and time

- June 19th (AM), 2020

- Venue

- This event is being held remotely on Zoom instead of at The Washington State Convention Center, Seattle, WA, U.S.

How to attend

- Go to the CVPR internal site to attend the tutorial: http://cvpr20.com/image-retrieval/. You can find the Zoom link there.

- All presentation slides and videos are available from the links in the schedule section below.

Overview

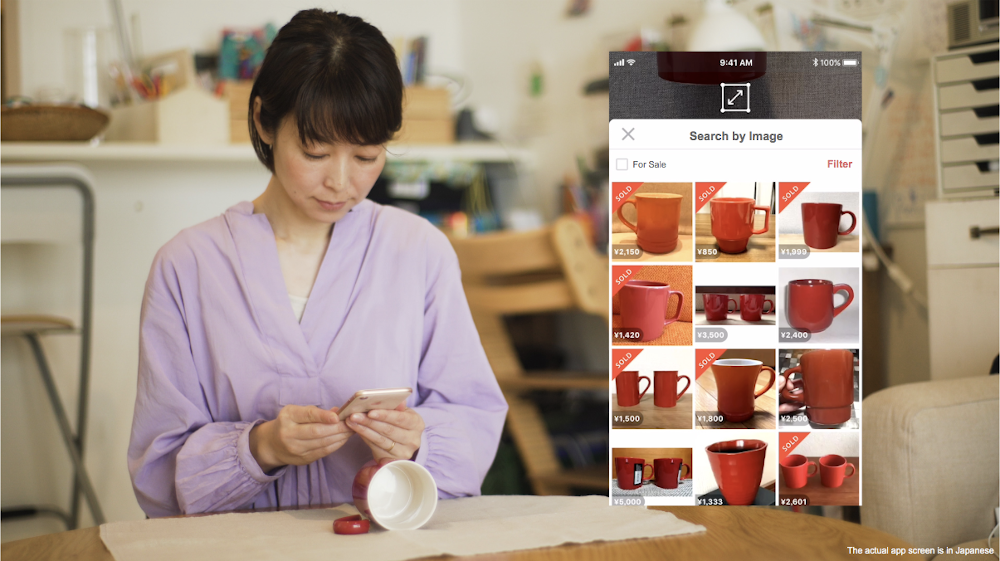

Content-based image retrieval is one of the most essential techniques for interacting with visual collections. Although significant progress has been made in the last decade, existing technologies have only been evaluated on standard benchmarks such as the Oxford dataset, which mainly consists of building images. There has not been enough discussion of how to build a practical, large-scale visual search system for real-world applications, such as recommending shopping items in online marketplaces or re-identifying pedestrians in security scenarios.

This tutorial covers several important components of building an image retrieval system for real-world applications.

- First, we will review state-of-the-art algorithms for approximate nearest neighbor search. Because the choice of search algorithm is critical for performance, we will provide a practical guide to selecting the best algorithm for a given task.

- In the second part, we will present an example of how such an algorithm is used in an online C2C marketplace app with over one billion listings and over 15 million monthly active users. Specifically, we will show how we productionize a highly scalable and highly available visual search system on Kubernetes for the app.

- In the third part, we will present a systematic review of heterogeneous person re-identification, where the inter-modality discrepancy is the main challenge. We consider four cross-modality application scenarios: low-resolution (LR), infrared (IR), sketch, and text. We will introduce and organize the available datasets in each category, and summarize and compare the representative approaches.

- Finally, we will give a live-coding demo that implements an image search engine from scratch. By leveraging a pre-trained deep model, we will show that a web-based search system can be built with only 100 lines of Python code.
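At its core, a minimal image search engine extracts one feature vector per image and returns the database images whose vectors are closest to the query's. The following is a minimal sketch of that search step only, not the demo's code. It assumes features have already been extracted (toy 3-D vectors stand in for the output of a pre-trained model), and the helper names `l2_normalize`, `l2_distance`, and `search` are hypothetical. It uses an exact linear scan, which is the baseline that approximate nearest neighbor methods are designed to speed up.

```python
import heapq
import math
from typing import List, Sequence, Tuple


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two feature vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same dimension")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length; a zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else [float(x) for x in v]


def search(query: Sequence[float],
           database: Sequence[Sequence[float]],
           k: int) -> List[Tuple[int, float]]:
    """Exact k-nearest-neighbor search by linear scan.

    Returns up to k (database index, distance) pairs, closest first.
    """
    # Score every database vector, then keep the k smallest distances.
    scored = ((l2_distance(query, vec), idx) for idx, vec in enumerate(database))
    best = heapq.nsmallest(k, scored)
    return [(idx, dist) for dist, idx in best]


# Hypothetical toy "features" standing in for descriptors of four images.
db = [l2_normalize(v) for v in ([1, 0, 0], [0, 1, 0], [0.9, 0.1, 0], [0, 0, 1])]
query = l2_normalize([1, 0.05, 0])
print([idx for idx, _ in search(query, db, k=2)])  # [0, 2]
```

The scan costs O(N·D) per query for N database vectors of dimension D, which is why large-scale systems replace it with an approximate nearest neighbor index (for example, one built with a library such as Faiss) while keeping the same query-in, ranked-indices-out interface.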

Schedule

- 08:30 - 09:15 (Seattle) / 17:30 - 18:15 (Paris) / 24:30 - 25:15 (Tokyo)

- Opening - Yusuke Matsui, Takuma Yamaguchi, Zheng Wang (slides)

- Billion-scale Approximate Nearest Neighbor Search - Yusuke Matsui (slides, video)

- 09:15 - 10:00 (Seattle) / 18:15 - 19:00 (Paris) / 25:15 - 26:00 (Tokyo)

- A Large-scale Visual Search System in the C2C Marketplace App Mercari - Takuma Yamaguchi (slides, video, promo video)

- 10:00 - 10:30 (Seattle) / 19:00 - 19:30 (Paris) / 26:00 - 26:30 (Tokyo)

- Coffee break

- 10:30 - 11:15 (Seattle) / 19:30 - 20:15 (Paris) / 26:30 - 27:15 (Tokyo)

- Beyond Intra-modality Discrepancy: A Survey of Heterogeneous Person Re-identification - Zheng Wang (slides, video)

- 11:15 - 12:00 (Seattle) / 20:15 - 21:00 (Paris) / 27:15 - 28:00 (Tokyo)

- Live-coding Demo to Implement an Image Search Engine from Scratch - Yusuke Matsui (slides, code, demo, video)

Playlist of all recorded videos

Organizers

Yusuke Matsui

The University of Tokyo

Takuma Yamaguchi

Mercari Inc.

Zheng Wang

National Institute of Informatics

BibTeX

@misc{cvpr20_tutorial_image_retrieval,
  author = {Yusuke Matsui and Takuma Yamaguchi and Zheng Wang},
  title = {CVPR2020 Tutorial on Image Retrieval in the Wild},
  howpublished = {\url{https://matsui528.github.io/cvpr2020_tutorial_retrieval/}},
  year = {2020}
}